The AICommunityOWL is a private, independent network of AI enthusiasts. It was founded in 2020 by employees of Fraunhofer IOSB-INA, the OWL University of Applied Sciences (TH OWL), the Centrum Industrial IT (CIIT) and Phoenix Contact. Together, we believe in digital progress through the use of machine learning. We want to create sustainable solutions for the challenges of the future: industry, mobility, smart buildings and smart cities – and above all, for people!

The Machine Learning Reading Group (MLRG) of the AICommunityOWL aims to build a better technical understanding of current trends in machine learning. The target audience is researchers and practitioners in the field of machine learning. We read and discuss current papers with high media impact or prominent placement (at least oral presentations) at leading conferences, e.g. NeurIPS, ICML, ICLR, AISTATS, UAI, COLT, KDD, AAAI, CVPR, ACL, or IJCAI. Attendees are expected to have read (or at least skimmed) the paper to be presented, so that they are not thrown off by the notation or problem statement and can take part in an informed discussion. Suggestions for future papers are encouraged, as are volunteer presenters.

Our next online meeting takes place on Tuesday, April 12th, at 16:00 via this link.

Don’t miss the date: save the event to your calendar.

Next Session Title:

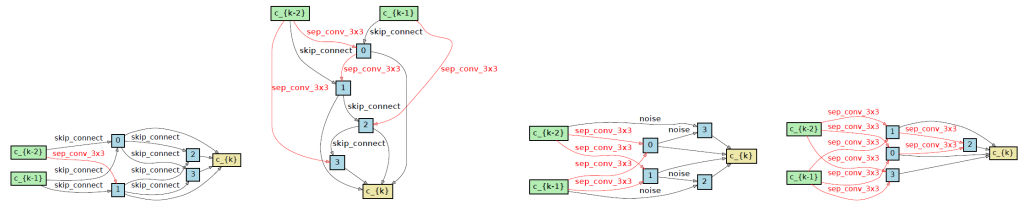

Rethinking Architecture Selection in Differentiable NAS

https://arxiv.org/pdf/2108.04392

Abstract:

In the domain of Neural Architecture Search (NAS), differentiable NAS remains one of the most popular methods due to its simplicity and search efficiency. It jointly optimizes the model weights and the architecture parameters of a weight-sharing supernet using gradient-based algorithms. At the end of the search, the operations with the largest architecture parameters are selected to form the final architecture. This relies on the implicit assumption that the values of the architecture parameters reflect the strength of the corresponding operations. Surprisingly, the architecture selection process has received very little attention, whereas the optimization of the supernet has been researched in depth.
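To make the selection step in the abstract concrete, here is a minimal sketch of picking, for each edge of a supernet cell, the operation with the largest architecture parameter. The operation names, edge names, and parameter values are made up for illustration, and the sketch shows only the conventional selection rule the abstract describes, not the method proposed in the paper.

```python
import math

# Hypothetical candidate operations (illustrative names, not from the announcement).
OPS = ["skip_connect", "sep_conv_3x3", "max_pool_3x3"]


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def select_architecture(alphas):
    """For each edge, keep the operation with the largest architecture parameter."""
    chosen = {}
    for edge, alpha in alphas.items():
        weights = softmax(alpha)  # mixing weights used inside the supernet
        best = max(range(len(weights)), key=weights.__getitem__)
        chosen[edge] = OPS[best]
    return chosen


# Made-up architecture parameters for two edges, as they might look after a search.
example_alphas = {
    "edge_0_1": [1.2, 0.3, -0.5],
    "edge_0_2": [-0.1, 0.8, 0.4],
}

selected = select_architecture(example_alphas)
print(selected)
```

Because softmax is monotonic, taking the largest mixing weight is the same as taking the largest raw parameter; the assumption questioned in the abstract is whether that value says anything about how much an operation actually contributes.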

Speaker:

Arjun Majumdar (TH OWL)

For questions or topic suggestions, feel free to contact markus.lange-hegermann@th-owl.de

Sign up for the MLRG mailing list to never miss another session!