The AICommunityOWL is a private, independent network of AI enthusiasts. It was founded in 2020 by employees of Fraunhofer IOSB-INA, the OWL University of Applied Sciences (TH OWL), the Centrum Industrial IT (CIIT) and Phoenix Contact. Together, we believe in digital progress through the use of machine learning. We want to create sustainable solutions for the challenges of the future: industry, mobility, smart buildings and smart cities – and above all, for people!

The Machine Learning Reading Group (MLRG) of the AICommunityOWL aims to build a better understanding of current trends in machine learning on a technical level. Its target audience is researchers and practitioners in the field of machine learning. We read and discuss current papers that have had high media impact or a prominent position (at least an oral presentation) at leading conferences, e.g., NeurIPS, ICML, ICLR, AISTATS, UAI, COLT, KDD, AAAI, CVPR, ACL, or IJCAI. Attendees are expected to have read (or at least skimmed) the papers to be presented. That way, the notation and problem statement will not throw them off, and they can take part in informed discussions about the paper. Suggestions for future papers are welcome, as are volunteer presenters.

Our next online meeting will take place on Tuesday, June 1st, at 16:00 via the following link.

Don’t miss the date and save the event to your calendar:

Next Session:

Nyströmformer: A Nyström-based Algorithm for Approximating Self-Attention

Abstract:

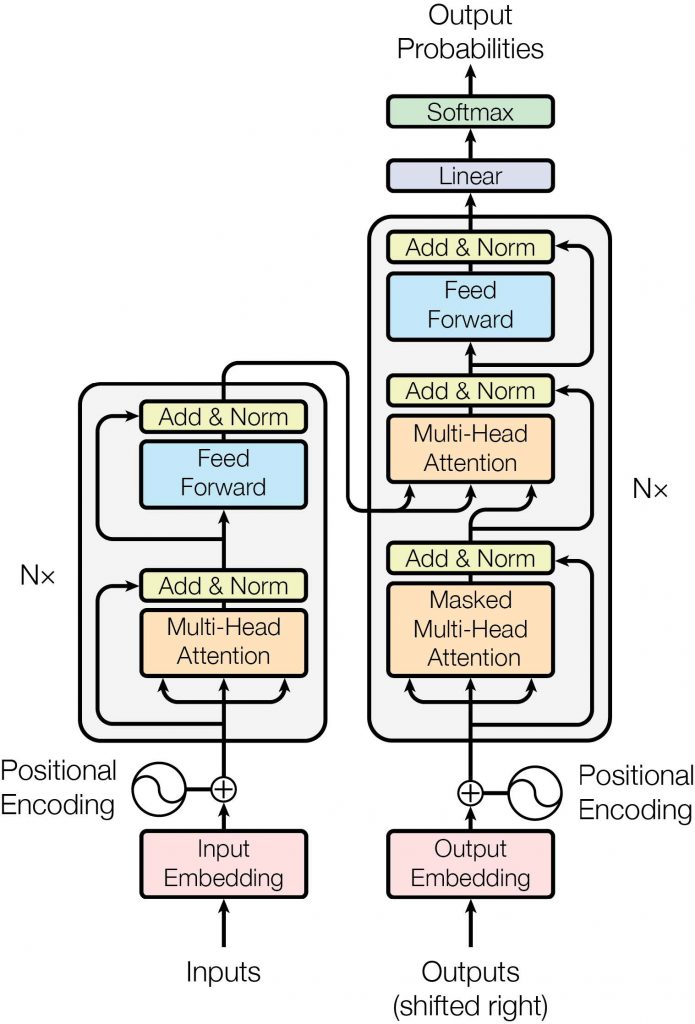

Since their introduction in 2017, Transformers have shown that neither LSTMs nor convolutional networks are required to achieve state-of-the-art performance in many Natural Language Processing (NLP) tasks; a self-attention mechanism suffices. Recent examples are BERT and GPT-3. Abandoning recurrence and convolution speeds up training considerably, because the computation over all positions of a sequence can be parallelized. Unfortunately, this only holds for short sequences. The runtime and memory consumption of self-attention grow quadratically with the sequence length, because for a sequence of n tokens the attention matrix has n × n entries. We want to discuss several methods that have been proposed to remedy this issue. One of them is the Nyström method, a matrix approximation technique that approximates the full self-attention matrix from a small number of landmark points. This reduces the cost to linear in the sequence length.
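To make the quadratic cost and the Nyström idea concrete, here is a minimal, illustrative sketch in plain Python. It is not the paper's implementation. Exact softmax attention materializes the full n × n matrix. The Nyström-style variant, in the spirit of the Nyströmformer paper, picks m landmarks as segment means of the queries and keys. It then approximates the n × n softmax matrix as F · A⁻¹ · B, where F = softmax(Q K̃ᵀ/√d) is n × m, A = softmax(Q̃ K̃ᵀ/√d) is m × m, and B = softmax(Q̃ Kᵀ/√d) is m × n. The sketch makes two assumptions. First, m divides n. Second, A is invertible, so an exact Gauss-Jordan inverse stands in for the iterative Moore-Penrose pseudoinverse used in the paper. All helper names are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def scale(a, c):
    return [[c * x for x in row] for row in a]


def softmax_rows(a):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    out = []
    for row in a:
        mx = max(row)
        exps = [math.exp(x - mx) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q, k, v):
    """Exact softmax attention: builds the full n x n matrix (O(n^2) time and memory)."""
    c = 1 / math.sqrt(len(q[0]))
    s = softmax_rows(scale(matmul(q, transpose(k)), c))
    return matmul(s, v)


def segment_means(x, m):
    """Hypothetical landmark selection: average m equal segments of rows (assumes m divides n)."""
    seg = len(x) // m
    return [[sum(col) / seg for col in zip(*x[i * seg:(i + 1) * seg])] for i in range(m)]


def inverse(a):
    """Gauss-Jordan inverse with partial pivoting (assumes a is invertible)."""
    n = len(a)
    aug = [row[:] + [float(i == j) for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def nystrom_attention(q, k, v, m):
    """Nyström-style approximation with m landmarks; never forms the n x n matrix."""
    c = 1 / math.sqrt(len(q[0]))
    q_l, k_l = segment_means(q, m), segment_means(k, m)
    f = softmax_rows(scale(matmul(q, transpose(k_l)), c))    # n x m
    a = softmax_rows(scale(matmul(q_l, transpose(k_l)), c))  # m x m
    b = softmax_rows(scale(matmul(q_l, transpose(k)), c))    # m x n
    # Multiply (F A^-1) by (B V) so every intermediate is at most n x m or m x d.
    return matmul(matmul(f, inverse(a)), matmul(b, v))
```

For a fixed number of landmarks m, the work in `nystrom_attention` grows linearly with n rather than quadratically. A useful sanity check: when m = n, every landmark is a single token, so F = A = B and the approximation reduces to exact attention, as long as A is invertible.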

Links:

Attention Is All You Need: https://arxiv.org/pdf/1706.03762.pdf

Nyströmformer: A Nyström-based Algorithm for Approximating Self-Attention: https://arxiv.org/pdf/2102.03902.pdf

Speakers:

Jörn Tebbe (TH OWL)

For questions or topic suggestions, feel free to contact markus.lange-hegermann@th-owl.de

Feel free to join the official mailing list of the Machine Learning Reading Group at https://aicommunityowl.de/mlrg-list (low traffic). You will need the password: mlrgVerteiler

We look forward to your participation!